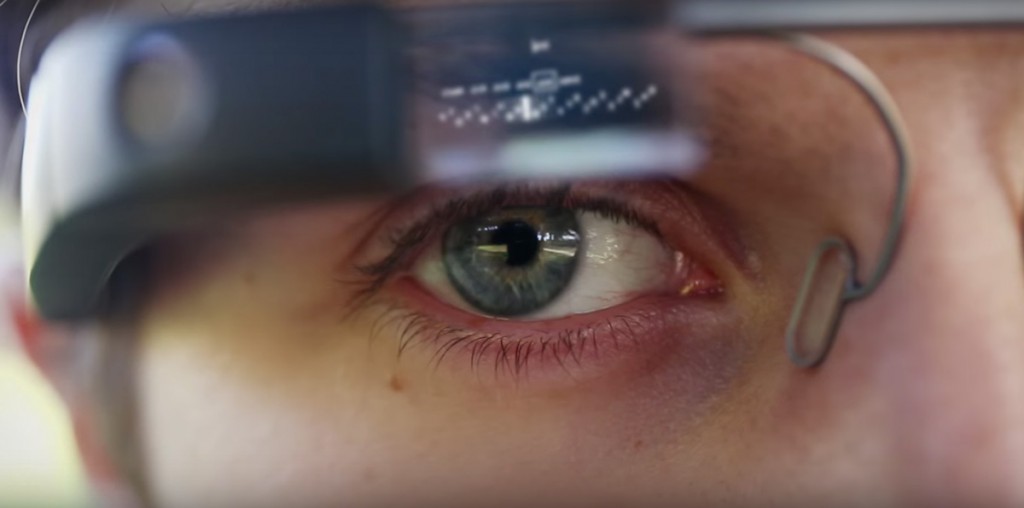

The first version of Google Glass was based on a small display layer in the corner of the eye that gave more direct access to information from the phone. But the vision of augmented reality goes further than that. In addition to freeing the hands, the Glass of the future must give information about the immediate environment and become a new medium for many practices.

I – INTERACT WITH GLASS

The objective of Glass is to free the user’s hands, facilitate access to the information around him and keep him connected with his digital life. Today, interaction with Glass relies essentially on voice recognition and on touch on the right side of the glass case. A few small movements such as a wink or a head shake are available for special features. These interactions are restrictive and don’t cover all actions.

The different ways to interact with Google Glass

Here, I describe the advantages and weaknesses of the different types of interaction.

a) Voice Recognition

| ADVANTAGES | WEAKNESSES |
|---|---|
| direct access | not discreet |
| hands-free | direct conversation vs. Glass commands (repeating commands is not natural) |
| | the command lines must be displayed if you don't know them by heart |
| | other people can command your Glass with their voice |

Voice recognition can’t be the only means of interaction.


b) Hand Gestures

The gestures of the current Glass are efficient for the current model, but they require real training. They are not as intuitive as using a smartphone. Project Soli could be an amazing tool and would allow new kinds of interaction.

| ADVANTAGES | WEAKNESSES |
|---|---|
| can be natural for some features | hands are not free |
| | can look very odd in public |
| | strain on the muscles and on natural body posture |

In Minority Report, Tom Cruise’s character needs real training to use his screen.

c) Head shakes

d) Eye focus

The eyes could work like a computer mouse: target with the eyes and use an eyebrow movement to click.

| ADVANTAGES | WEAKNESSES |
|---|---|
| intuitive & direct | can strain the eyes |
| very good precision | |
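As a rough illustration of the "eyes as a mouse" idea, here is a minimal sketch. Everything in it is an assumption made for the example: the `Target` rectangles, the display coordinates and the `eyebrow_raised` flag are hypothetical, and it does not use any real Glass or eye-tracking API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Target:
    """A rectangular on-screen element, in hypothetical display coordinates."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gaze_x: float, gaze_y: float) -> bool:
        return (self.x <= gaze_x <= self.x + self.width
                and self.y <= gaze_y <= self.y + self.height)


def target_under_gaze(targets: Iterable[Target],
                      gaze_x: float, gaze_y: float) -> Optional[Target]:
    """Return the first target the gaze point falls on, like a mouse hover."""
    for target in targets:
        if target.contains(gaze_x, gaze_y):
            return target
    return None


def click_with_eyebrow(targets: Iterable[Target], gaze_x: float, gaze_y: float,
                       eyebrow_raised: bool) -> Optional[str]:
    """Treat an eyebrow raise as a click on whatever is being looked at."""
    if not eyebrow_raised:
        return None
    hit = target_under_gaze(targets, gaze_x, gaze_y)
    return hit.name if hit else None


# Hypothetical two-item menu; the gaze point (20, 60) falls on "maps".
menu = [Target("mail", 0, 0, 100, 40), Target("maps", 0, 50, 100, 40)]
print(click_with_eyebrow(menu, 20, 60, eyebrow_raised=True))  # maps
```

Looking alone never triggers anything in this sketch: without the eyebrow movement the function returns `None`, which is one possible way to avoid accidental clicks while simply looking around.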

Even if the technology is not effective yet, this is the direction we must strive toward for the default interactions. The other senses can serve as specific support or for complementary access.

The challenge of creating interaction based only on eye focus raises some questions:

– How to navigate easily in the new system with only the eyes?

– How to use some commands with the eyes? (e.g. keyboard…)

– Is it a natural gesture?

– Does it create ocular problems in the short / medium / long term?

II – ARCHITECTURE

Even if this analysis needs a lot of testing, I try here to reflect on a general architecture that could become part of basic guidelines for the development of future applications. In this example, I assume that the user has a glass in front of each eye and that these glasses are the size of traditional glasses.

For this work, I chose a context of daily use: a sort of evolution of the first version of Google Glass. This context is the most complex because it involves managing very diverse parameters:

– indoor / outdoor

– mobility / passive

– several applications in use at the same time

– obstacles

But I think these short guidelines are generic and open, and can be used in different contexts (professional and hobby use).

General layers architecture

1) Mobility & Visual zone of walk

If the user needs to use his Glass at any time, he needs safe visibility while walking. His path must be free of visual barriers and visual noise. For safety reasons, it is important that this zone is kept free of blocking information.

This zone can display blocking information such as long text (emails, news…) only if the user is passive, that is, if he doesn’t need to see what is in front of him. These cases arise when he is seated.

2) Augmented Reality & Passive Mode Layer

This zone covers the whole visual field of the glass. It is the main layer. It can show two types of information depending on the mode of the Glass:

– in Walking mode: only augmented information directly connected with the nearby surroundings

– in Passive mode: it can display blocking information, the type of information that would greatly reduce the user’s safety while moving

The Glasses should be able to detect automatically whether the user is in Walking Mode or Passive Mode.
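One possible way to sketch this detection is shown here, assuming the Glasses can provide a short window of recent speed estimates in metres per second. The `detect_mode` function, the `Mode` names and the 0.5 m/s threshold are all hypothetical choices for illustration, not measured values or a real API.

```python
from enum import Enum
from statistics import mean
from typing import Sequence


class Mode(Enum):
    WALKING = "walking"
    PASSIVE = "passive"


# Assumed threshold: above this average speed the user is considered to be moving.
ASSUMED_WALKING_SPEED_MPS = 0.5


def detect_mode(recent_speeds_mps: Sequence[float],
                threshold: float = ASSUMED_WALKING_SPEED_MPS) -> Mode:
    """Classify the user as walking or passive from recent speed samples."""
    if not recent_speeds_mps:
        # No data: fall back to the mode that keeps the field of view clear.
        return Mode.WALKING
    if mean(recent_speeds_mps) >= threshold:
        return Mode.WALKING
    return Mode.PASSIVE


print(detect_mode([1.2, 1.4, 1.3]))  # Mode.WALKING
print(detect_mode([0.0, 0.1, 0.0]))  # Mode.PASSIVE
```

Averaging over a window rather than reacting to a single sample avoids flipping modes on every brief pause, and defaulting to Walking mode when no data is available errs on the side of safety.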

Examples of apps we could use on this layer:

– geo-markers

– augmented artistic exhibitions

– games

– reading long information (emails, news)

– karaoke

– auto-translation

– record a video

– take a picture

3) Permanent information layer

This is the information we need to access very often, such as the date, time, battery life, network signal and menu.

It’s a kind of mix of the main status bar information and the main apps of current smartphones.


4) Non-blocking information

This information is displayed on the sides of the visual field and above the walking visual field.

This is all the information that can be displayed as support: the kind of information you want or need to check often and that doesn’t block your walk, such as:

– short messages

– audio or video conference calls

– notifications (agenda, applications…)

– map & GPS Navigation

– quick search results

– music management (in shuffle mode)

Allow full screen only when the user is inactive.

When the user has stopped, we can give him the opportunity to pause and look.
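The rule above can be summed up in a small placement policy. This is only a sketch under stated assumptions: the `Item` type, its `blocking` flag and the three placement names ("side", "fullscreen", "deferred") are hypothetical labels invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """A piece of content to display (hypothetical model)."""
    label: str
    blocking: bool  # True for content that would hide the path, e.g. long text


def place(item: Item, user_is_moving: bool) -> str:
    """Decide where an item may appear, given whether the user is moving."""
    if not item.blocking:
        # Non-blocking information always goes to the side of the visual field.
        return "side"
    # Blocking content gets the full screen only once the user has stopped.
    return "deferred" if user_is_moving else "fullscreen"


print(place(Item("short message", blocking=False), user_is_moving=True))  # side
print(place(Item("long email", blocking=True), user_is_moving=True))      # deferred
print(place(Item("long email", blocking=True), user_is_moving=False))     # fullscreen
```

Deferring rather than discarding blocking content means the user can still pause and read it once he has stopped.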